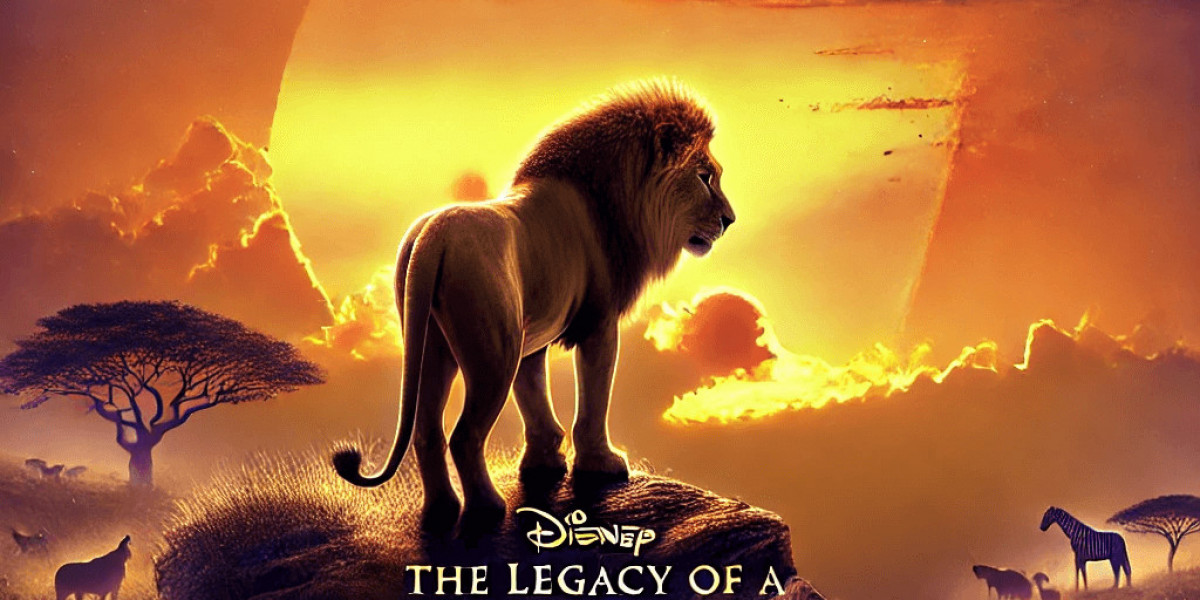

Mufasa, the mighty lion of Disney’s The Lion King, is more than just a character: he is a symbol of wisdom, strength, and sacrifice. His influence on the Pride Lands and on his son, Simba, cements his status as one of Disney’s most iconic and beloved leaders. In this post, we explore Mufasa’s legacy, his leadership qualities, and the life lessons he imparted.

The Legacy of Mufasa

Mufasa, voiced by James Earl Jones, is introduced as the noble ruler of the Pride Lands. He embodies the ideal leader: strong yet compassionate, powerful yet fair. His reign keeps the Circle of Life in balance, so that every creature in the savanna thrives under his watchful eye.

His tragic death at the hands of his brother, Scar, is one of the most heartbreaking moments in Disney history. However, Mufasa’s influence extends beyond his time on Earth. His spirit continues to guide Simba, reminding him of his responsibilities and his rightful place as king.

Mufasa’s Leadership Qualities

Mufasa’s leadership is defined by several key traits:

Wisdom: He teaches Simba about the Circle of Life, ensuring he understands the delicate balance of nature.

Strength: As the ruler, he protects his kingdom from threats, demonstrating bravery in the face of danger.

Compassion: Unlike Scar, Mufasa rules with kindness, showing respect for all creatures in the Pride Lands.

Mentorship: Even after his death, he continues to mentor Simba, appearing to him in the night sky and reminding him, "Remember who you are."

Life Lessons from Mufasa

Mufasa’s words and actions offer invaluable lessons for all of us:

Take Responsibility: Leadership is not just about power; it’s about serving others and maintaining balance.

Face Your Fears: Simba initially runs from his past, but Mufasa’s guidance helps him embrace his destiny.

Honor Your Legacy: Mufasa’s memory reminds us that we carry the lessons and values of those who came before us.

Mufasa’s Lasting Impact

Decades after The Lion King was released in 1994, Mufasa remains a legendary figure in pop culture. His wisdom and leadership continue to inspire audiences of all ages. Whether in the original animated film or in the photorealistic 2019 remake, his presence is a testament to the power of great storytelling.

Mufasa is not just a lion king—he is the heart of The Lion King. His legacy lives on in Simba, in the Pride Lands, and in the hearts of fans around the world.